In today’s cloud-native landscape, where systems are no longer monolithic, components communicate across networks, and continuous deployment ships new versions frequently, understanding the assumptions that lead to system failures is more critical than ever. The 8 Fallacies of Distributed Computing, originally formulated by Peter Deutsch and others at Sun Microsystems, remain as relevant today as when they were first articulated, perhaps even more so.

Modern cloud architectures, with their dynamic scaling, rolling deployments, and multi-region setups, mean that each time you call a service, you might be talking to a completely different node running a different version of that service. This reality makes it essential to design systems that can handle the inherent unreliability and variability of distributed environments.

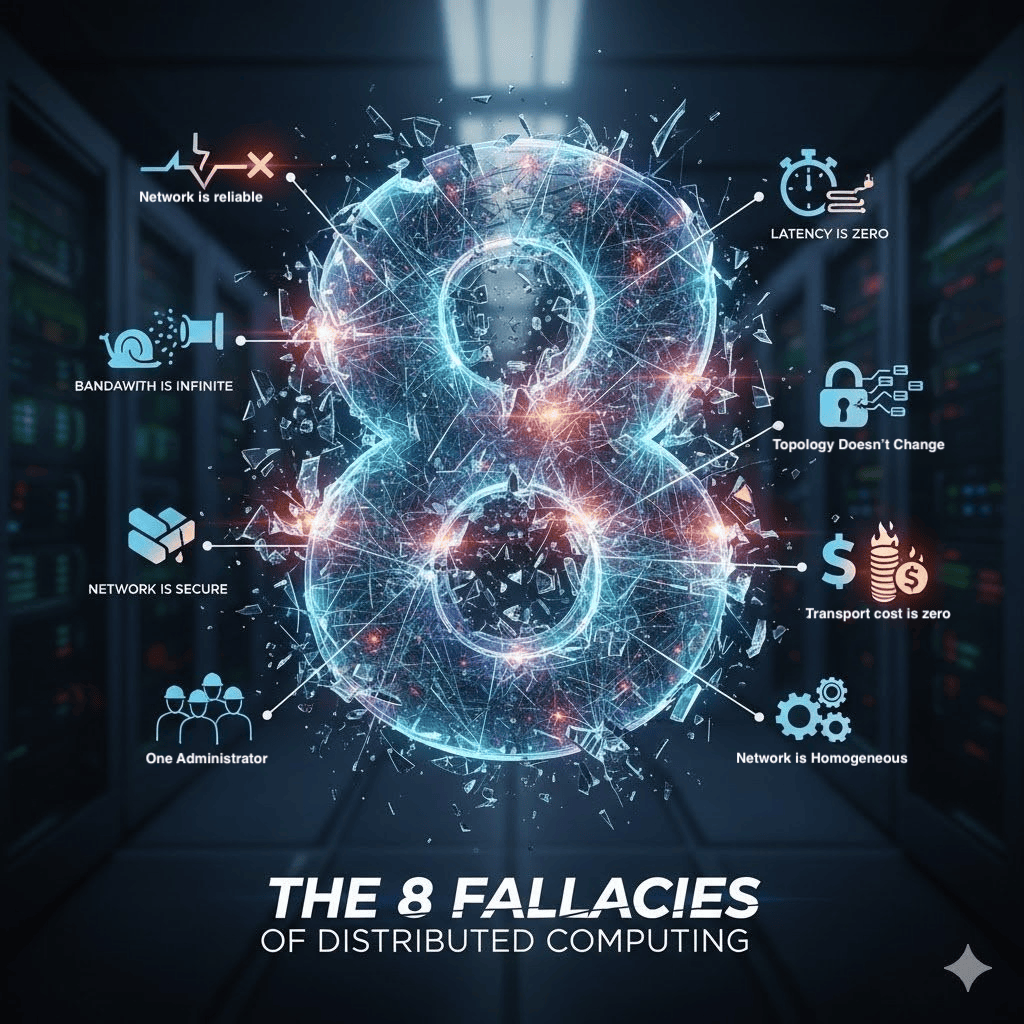

The 8 Fallacies

1. The Network is Reliable

The Assumption: Network connections will always work when you need them.

The Reality: Networks fail. Routers crash, cables get cut, packets go missing, DNS servers go down, and cloud providers experience outages. In a distributed system, network failures are not exceptions; they’re expected events.

Why it matters: No provider offers 100% uptime. With services spread across multiple availability zones and regions, network partitions can isolate entire sections of your system. A service in one region might be completely unreachable from another, even though both are “up” and running.

Best Practices:

- Design for graceful degradation when dependencies are unavailable

- Implement circuit breakers to fail fast when services are unreachable

- Use retry policies with exponential backoff
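The last two practices can be combined. Below is a minimal Python sketch, not a production library: `retry_with_backoff` retries transient failures with exponentially growing, randomly jittered delays, and `CircuitBreaker` stops calling a dependency after repeated failures so callers fail fast. The names, thresholds, and the choice of which exceptions count as transient are illustrative assumptions; the injectable `sleep` and `clock` parameters exist only to make the behaviour easy to test.

```python
import random
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient errors with capped exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # "Full jitter": sleep a random time in [0, min(cap, base * 2^attempt)].
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise ValueError("max_attempts must be at least 1")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without trying."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; once `reset_timeout`
    seconds have passed it lets a trial call through (half-open)."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("failing fast: dependency marked unavailable")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open after a failed trial)
            raise
        # Success closes the breaker and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

In use, the retry wraps the breaker, for example `retry_with_backoff(lambda: breaker.call(fetch_inventory))` with a hypothetical `fetch_inventory` client call. Because `CircuitOpenError` is not in `retry_on`, an open breaker short-circuits the retry loop instead of adding load to a dependency that is already struggling, and the jitter spreads retries from many clients so they do not all hit a recovering service at the same moment.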

2. Latency is Zero

The Assumption: Network calls are instantaneous, just like local function calls.

The Reality: Every network call has latency, and that latency varies. Dependencies may be under load or suffering from a noisy neighbour. A local network call can take milliseconds, while cross-region calls can take hundreds of milliseconds or more.

Why it matters: In today’s distributed architectures, a single user request might trigger dozens of service calls. If those calls run one after another and each takes 50 ms, just twenty of them add up to a full second, and your response time quickly becomes unacceptable.

Best Practices:

- Minimise the number of network calls in your critical path

- Use caching strategies to reduce remote calls

- Consider data locality and colocation of related services
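As an illustration of the caching practice, here is a minimal time-to-live (TTL) cache in Python. `fetch` stands in for any remote call (the `fetch_price` lookup mentioned below is hypothetical); a fresh entry is served from memory, and only misses and expired entries pay the network round trip. The injectable clock and the absence of locking and eviction are simplifying assumptions for a sketch.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Serves results of a remote lookup from memory for `ttl` seconds per key."""

    def __init__(self, fetch: Callable[[Hashable], Any], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch  # the slow remote call being cached
        self._ttl = ttl
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # fresh hit: no network round trip
        value = self._fetch(key)  # miss or expired: pay the latency once
        self._entries[key] = (now + self._ttl, value)
        return value
```

For example, `TTLCache(fetch_price, ttl=30.0)` would call `fetch_price` at most once per key every 30 seconds. The trade-off is staleness: callers may see data up to `ttl` seconds old, so the TTL should reflect how quickly the underlying data actually changes.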

3. Bandwidth is Infinite

The Assumption: You can send as much data as you want over the network without concern.

The Reality: Network bandwidth is limited and shared. Sending large payloads can saturate network links and impact other services.

Why it matters: With containerised applications and frequent deployments, large container images and data transfers can consume significant bandwidth. This becomes particularly problematic during rolling deployments when multiple nodes are pulling new images simultaneously.

Best Practices:

- Design APIs with pagination for large datasets

- Use compression for data transfer

- Consider data streaming for large payloads

- Implement proper resource quotas and limits
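Pagination and compression can be sketched together. In this Python example, which assumes an in-memory `RECORDS` list in place of a real data store, the server returns one page at a time with a cursor (the last `id` seen) and gzip-compresses the JSON body; the client follows cursors until none is returned. The function names, page size, and payload shape are hypothetical.

```python
import gzip
import json
from typing import Any, Dict, Iterator, List, Optional, Tuple

# Hypothetical dataset standing in for a large table behind an API.
RECORDS: List[Dict[str, Any]] = [
    {"id": i, "status": "active", "region": "eu-west-1"} for i in range(1, 1001)
]


def list_records(cursor: Optional[int], limit: int = 100) -> Tuple[bytes, Optional[int]]:
    """Server side: return one gzip-compressed JSON page and the next cursor.

    The cursor is the last id the client has seen; None means "no more pages".
    """
    after = cursor if cursor is not None else 0
    matching = [r for r in RECORDS if r["id"] > after]  # a DB would use WHERE id > ? ORDER BY id
    page = matching[:limit]
    next_cursor = page[-1]["id"] if len(matching) > limit else None
    body = gzip.compress(json.dumps(page).encode("utf-8"))
    return body, next_cursor


def iter_all_records(limit: int = 100) -> Iterator[Dict[str, Any]]:
    """Client side: follow cursors until the server reports no further pages."""
    cursor: Optional[int] = None
    while True:
        body, cursor = list_records(cursor, limit)
        yield from json.loads(gzip.decompress(body))
        if cursor is None:
            return
```

Cursor-based (keyset) pagination is used here rather than page offsets because rows inserted between requests do not shift the pages the client has yet to read. Compression pays off most on repetitive text formats such as JSON, at the cost of some CPU on both ends.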

4. The Network is Secure

The Assumption: Data transmitted over the network is safe from interception or tampering.

The Reality: Networks are inherently insecure. Data can be intercepted, modified, or replayed by malicious actors.

Why it matters: In cloud environments, data often crosses multiple networks and systems. With the rise of zero-trust architectures, every network call must be authenticated, authorised and audited. The dynamic nature of cloud deployments means that security policies must be enforced at the application level, not just the network level.

Best Practices:

- Never trust, always verify (zero-trust principle)

- Encrypt all data in transit using TLS

- Implement mutual TLS (mTLS) for service-to-service communication

- Use service mesh technologies for consistent security policies
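TLS and mTLS are often configured in infrastructure (load balancers, sidecars, or a service mesh) rather than in application code. As a complement at the application level, in the spirit of “never trust, always verify”, the Python sketch below signs each request body with HMAC-SHA256 and checks a timestamp and a one-time nonce, so the receiver can detect tampering and replays. It encrypts nothing, so it is not a substitute for TLS. The header names, the shared key, and the five-minute skew window are assumptions for illustration.

```python
import hashlib
import hmac
import secrets
import time
from typing import Callable, Dict, Set

# Hypothetical shared key; in practice it would come from a secrets manager and be rotated.
SECRET_KEY = b"example-shared-secret"
MAX_SKEW_SECONDS = 300  # reject requests whose timestamp is more than 5 minutes off


def sign(body: bytes, timestamp: int, nonce: str, key: bytes = SECRET_KEY) -> str:
    """HMAC-SHA256 over timestamp, nonce and body, so none can be altered unnoticed."""
    message = f"{timestamp}.{nonce}.".encode("utf-8") + body
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def make_headers(body: bytes, now: Callable[[], float] = time.time) -> Dict[str, str]:
    """Sender side: attach a timestamp, a random one-time nonce and the signature."""
    timestamp = int(now())
    nonce = secrets.token_hex(16)
    return {
        "X-Timestamp": str(timestamp),
        "X-Nonce": nonce,
        "X-Signature": sign(body, timestamp, nonce),
    }


class RequestVerifier:
    """Receiver side: check integrity (signature) and freshness (timestamp, nonce)."""

    def __init__(self, now: Callable[[], float] = time.time) -> None:
        self._now = now
        self._seen_nonces: Set[str] = set()  # unbounded here; a real store would expire entries

    def verify(self, body: bytes, headers: Dict[str, str]) -> bool:
        try:
            timestamp = int(headers["X-Timestamp"])
            nonce = headers["X-Nonce"]
            signature = headers["X-Signature"]
        except (KeyError, ValueError):
            return False  # missing or malformed headers
        if abs(self._now() - timestamp) > MAX_SKEW_SECONDS:
            return False  # too old or too far in the future: possible replay
        expected = sign(body, timestamp, nonce)
        if not hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8")):
            return False  # body or headers changed in transit
        if nonce in self._seen_nonces:
            return False  # exact replay of an already accepted request
        self._seen_nonces.add(nonce)
        return True
```

A real scheme would also sign the HTTP method and path, keep nonces in a store that expires them after the skew window, and prefer per-service identities over a single shared key.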

5. Topology Doesn’t Change

The Assumption: The network structure and available services remain constant.

The Reality: Network topologies change constantly. Services come and go, load balancers redirect traffic, and infrastructure is continuously updated.

Why it matters: In cloud environments with auto-scaling and rolling deployments, your service might be talking to different instances of the same service with each call. Service discovery becomes critical, and hardcoded endpoints are a recipe for disaster.

Best Practices:

- Design for stateless services that can be replaced at any time

- Use service discovery mechanisms

- Implement health checks and load balancing

- Use configuration management for dynamic endpoint resolution
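Service discovery and health checking can be illustrated with a small in-memory registry. In this Python sketch, which stands in for a real discovery system, instances register and deregister at runtime, a pluggable `probe` function marks them healthy or unhealthy, and `resolve` round-robins over the healthy ones, so callers ask for a service name instead of a hardcoded address. The class names, the probe, and the addresses are hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict, Iterator


@dataclass
class Instance:
    address: str
    healthy: bool = True  # optimistic until the first health check runs


class ServiceRegistry:
    """In-memory stand-in for a service discovery system."""

    def __init__(self, probe: Callable[[str], bool]) -> None:
        self._probe = probe  # hypothetical health check, e.g. an HTTP GET to a health endpoint
        self._instances: Dict[str, Dict[str, Instance]] = {}
        self._counters: Dict[str, Iterator[int]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, {})[address] = Instance(address)

    def deregister(self, service: str, address: str) -> None:
        self._instances.get(service, {}).pop(address, None)

    def run_health_checks(self) -> None:
        for instances in self._instances.values():
            for instance in instances.values():
                instance.healthy = self._probe(instance.address)

    def resolve(self, service: str) -> str:
        """Round-robin over the currently healthy instances of `service`."""
        healthy = sorted(
            inst.address
            for inst in self._instances.get(service, {}).values()
            if inst.healthy
        )
        if not healthy:
            raise LookupError(f"no healthy instances of {service!r}")
        turn = next(self._counters.setdefault(service, itertools.count()))
        return healthy[turn % len(healthy)]
```

Note that a newly registered instance is treated as healthy until the next check runs; a stricter registry might require a passing probe before routing any traffic to it.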

6. There is One Administrator

The Assumption: A single person or team controls the entire distributed system.

The Reality: Different teams manage different services, each with their own deployment schedules, monitoring, and operational procedures.

Why it matters: In distributed systems architectures, each service might be owned by a different team with different release cycles. This makes coordination challenging and increases the likelihood of version mismatches and integration issues.

Best Practices:

- Implement comprehensive API versioning strategies

- Use contract testing to ensure compatibility

- Establish clear service ownership and communication protocols

- Implement proper monitoring and alerting across service boundaries
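Contract testing can be as simple as a consumer writing down which fields it depends on and the provider checking its responses against that list before each release. The Python sketch below is a hand-rolled illustration of the idea, not the API of any contract-testing tool (dedicated tools such as Pact formalise it); the `ORDER_CONTRACT_V2` fields and the `/v2/orders/{id}` endpoint are hypothetical. Extra fields are allowed, so the provider can add data without breaking the consumer, while removed or retyped fields are reported.

```python
from typing import Any, Dict, List, Mapping

# Hypothetical consumer contract for GET /v2/orders/{id}: only the fields
# this consumer actually reads, with the types it expects.
ORDER_CONTRACT_V2: Dict[str, type] = {
    "id": str,
    "status": str,
    "total_cents": int,
}


def contract_violations(response: Mapping[str, Any], contract: Mapping[str, type]) -> List[str]:
    """Return human-readable violations; an empty list means compatible.

    Extra fields in the response are ignored on purpose (tolerant reader),
    so providers can add data without breaking this consumer.
    """
    problems: List[str] = []
    for name, expected_type in contract.items():
        if name not in response:
            problems.append(f"missing field {name!r}")
        elif not isinstance(response[name], expected_type):
            actual = type(response[name]).__name__
            problems.append(f"{name!r} is {actual}, expected {expected_type.__name__}")
    return problems
```

One caveat of `isinstance` checks in Python: a boolean passes as an `int`, so a stricter check may be needed for numeric fields.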

7. Transport Cost is Zero

The Assumption: Sending data over the network is free.

The Reality: Network operations have costs in terms of CPU, memory, bandwidth, and time. These costs compound quickly in distributed systems.

Why it matters: Cloud providers charge for data transfer, and inefficient network usage can significantly impact costs. With frequent deployments and scaling events, these costs can spiral out of control.

Best Practices:

- Monitor and optimise data transfer costs

- Use efficient serialisation formats

- Implement proper connection pooling

- Consider data locality and regional deployment strategies
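To make “efficient serialisation formats” concrete, the Python sketch below encodes the same hypothetical sensor readings as JSON and as a fixed binary layout using the standard `struct` module. Each binary record is exactly 20 bytes (4 + 8 + 8), while the JSON form repeats every field name in every record and is several times larger for this shape of data. The record layout and field names are assumptions for illustration.

```python
import json
import struct
from typing import List, Tuple

# Hypothetical telemetry record: (sensor_id, timestamp_ms, reading)
Reading = Tuple[int, int, float]

# Fixed layout in network byte order, no padding:
# unsigned 32-bit id, unsigned 64-bit millisecond timestamp, 64-bit float.
RECORD = struct.Struct("!IQd")


def encode_json(readings: List[Reading]) -> bytes:
    return json.dumps(
        [{"sensor_id": s, "timestamp_ms": t, "reading": r} for s, t, r in readings]
    ).encode("utf-8")


def encode_binary(readings: List[Reading]) -> bytes:
    return b"".join(RECORD.pack(*r) for r in readings)


def decode_binary(payload: bytes) -> List[Reading]:
    return [RECORD.unpack_from(payload, offset) for offset in range(0, len(payload), RECORD.size)]
```

Fewer bytes means less bandwidth and lower transfer charges, but a hand-packed layout is brittle when the data changes. Schema-based formats such as Protocol Buffers or Avro offer compact encodings together with rules for evolving the schema.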

8. The Network is Homogeneous

The Assumption: All parts of the network have the same characteristics and capabilities.

The Reality: Networks are heterogeneous, with different latencies, bandwidths, and reliability characteristics across different segments.

Why it matters: In cloud environments, different regions have different performance characteristics. A service deployed in one region might perform differently than the same service in another region, even with identical code.

Best Practices:

- Test your applications across different network conditions

- Implement adaptive algorithms that can handle varying network characteristics

- Use monitoring to understand actual network performance

- Design for the weakest network link in your system
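One well-known adaptive algorithm is TCP’s retransmission-timeout estimator from RFC 6298, which tracks a smoothed round-trip time and its variation. The Python sketch below adapts that idea to request timeouts: each observed latency updates the estimate, and the timeout becomes the smoothed latency plus four times its variation, clamped to a range. The clamps, the conservative default before any samples arrive, and dropping RFC 6298’s clock-granularity term are simplifications chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdaptiveTimeout:
    """Request timeout derived from observed latency, adapted from RFC 6298."""

    alpha: float = 1 / 8        # weight of a new sample in the smoothed latency
    beta: float = 1 / 4         # weight of a new sample in the latency variation
    k: float = 4.0              # deviations of headroom added on top of the average
    min_timeout: float = 0.05   # seconds; illustrative clamps
    max_timeout: float = 10.0
    srtt: Optional[float] = None  # smoothed latency, None until the first sample
    rttvar: float = 0.0           # smoothed mean deviation of latency

    def observe(self, sample: float) -> None:
        """Feed one measured latency (seconds) into the estimate."""
        if self.srtt is None:
            self.srtt = sample
            self.rttvar = sample / 2
        else:
            # As in RFC 6298, update the variation using the old average first.
            self.rttvar = (1 - self.beta) * self.rttvar + self.beta * abs(self.srtt - sample)
            self.srtt = (1 - self.alpha) * self.srtt + self.alpha * sample

    def timeout(self) -> float:
        if self.srtt is None:
            return self.max_timeout  # no data yet: be conservative
        raw = self.srtt + self.k * self.rttvar
        return min(self.max_timeout, max(self.min_timeout, raw))
```

Keeping one estimator per dependency (or per region) lets a call to a nearby service use a tight timeout while a cross-region call gets a looser one, instead of a single hardcoded value that is too short for the slow link and too long for the fast one.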

Why These Fallacies Matter More Than Ever

In today’s cloud-native, CI/CD-driven world, these fallacies are not just academic concepts—they’re daily realities that can make or break your system. With continuous deployment, your system is in a constant state of change. Each service call might hit a different version of your application, running on different infrastructure, with different performance characteristics.

The dynamic nature of modern cloud deployments means that the assumptions these fallacies describe are violated more frequently and more dramatically than ever before. Understanding and designing for these realities is not optional; it’s essential for building resilient, scalable systems.

Conclusion

The 8 Fallacies of Distributed Computing serve as a crucial reminder that distributed systems are fundamentally different from monolithic applications. In today’s cloud landscape, where services are constantly being deployed, scaled, and updated, these fallacies are more relevant than ever. By acknowledging these realities and designing your systems accordingly, you can build applications that are resilient, scalable, and capable of thriving in the dynamic, ever-changing world of distributed computing.

Remember: in distributed systems, failure is not an exception—it’s a feature. Design for it, test for it, and embrace it as part of your system’s normal operation.